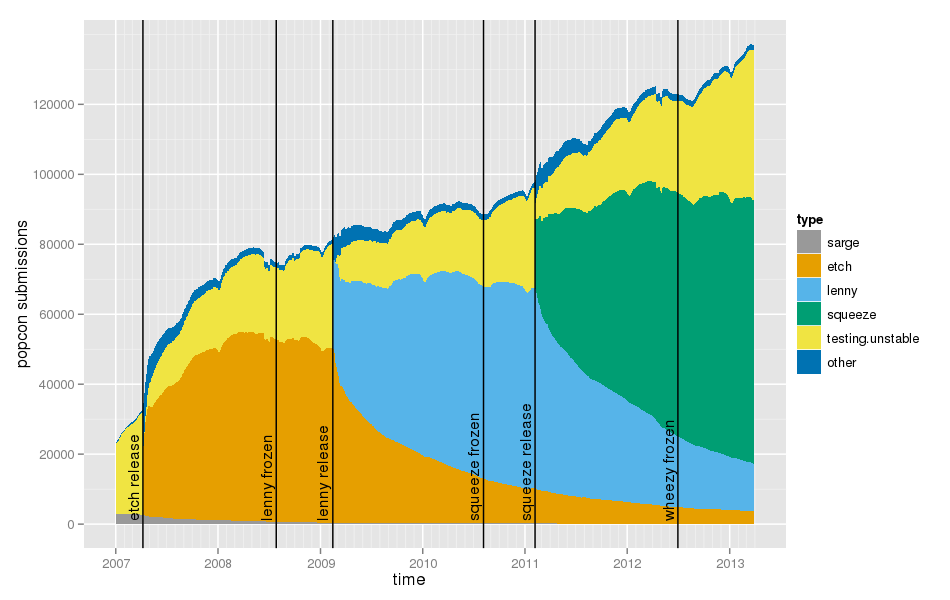

The graph above is generated from popcon submissions. Since each submission includes the version of the popularity-contest package, one can determine which Debian release the submitter was using (a new version of the popularity-contest package is generally uploaded just after each release to make that tracking possible).

The graph is similar to the one found on popcon, except that popularity-contest versions newer than the one in the latest stable release are aggregated as “testing/unstable”.
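The aggregation described above can be sketched in R. This assumes the per-version submission counts are already available as a named vector. The `releases` table and its version numbers are hypothetical placeholders that have not been checked against the Debian archive. `compareVersion()` (from R's utils package) only handles simple dotted versions such as `1.49`, so it cannot parse full Debian version strings with epochs or `~`.

```r
# Hypothetical table: the popularity-contest version assumed to ship in each
# stable release, oldest first. Illustrative placeholders only.
releases <- data.frame(
  release        = c("etch", "lenny", "squeeze"),
  popcon_version = c("1.33", "1.46", "1.49"),
  stringsAsFactors = FALSE
)

# Map one popularity-contest version string to a release name.
# Versions newer than the one in the latest stable release are
# lumped together as "testing/unstable".
version_to_release <- function(v, releases) {
  latest <- releases$popcon_version[nrow(releases)]
  if (compareVersion(v, latest) > 0) {
    return("testing/unstable")
  }
  # Otherwise pick the newest release whose popcon version is <= v.
  older_or_equal <- vapply(releases$popcon_version,
                           function(r) compareVersion(r, v) <= 0,
                           logical(1))
  if (!any(older_or_equal)) {
    return("older")
  }
  releases$release[max(which(older_or_equal))]
}

# Sum per-version submission counts into per-release counts.
aggregate_by_release <- function(counts, releases) {
  rel <- vapply(names(counts), version_to_release, character(1),
                releases = releases)
  vapply(split(counts, rel), sum, numeric(1))
}
```

Usage: `aggregate_by_release(c("1.46" = 20, "1.49" = 30, "1.50" = 5), releases)` returns one total per release name. Comparing against the stable release's popcon version, rather than listing every unstable upload, means new uploads to unstable are picked up without changing the table.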

Comments:

- Popcon submitters might not be representative of Debian users, of course.

- There are quite a lot of testing/unstable users, and their proportion is quite stable:

  - Today: testing/unstable: 43119 submissions (31.4%); squeeze: 75454 (54.9%); lenny: 13603 (9.9%)

  - 2011-02-04 (just before the squeeze release): testing/unstable: 29012 (29.7%); lenny: 57262 (58.6%); etch: 10032 (10.3%)

  - 2009-02-13 (just before the lenny release): testing/unstable: 30108 (36.9%); etch: 49996 (61.2%)

- I don’t understand why the number of Debian stable installations does not increase, except when a new release is made. It’s as if people installed Debian, upgraded directly to testing, and switched back to tracking stable after the release. Or maybe people don’t update their systems? A more detailed analysis could be done by looking at the raw popcon data.

- Upgrading to the next stable release takes time. Given the proportion of users still running oldstable one year after a release, it would be better not to remove oldstable from the mirrors too early.

Scripts are available on git.debian.org.

All I can speak to is what I do.

With my desktop, I run unstable.

With servers I run stable unless the next stable release looks to be less than six months out, in which case I run testing via the codename of the next release. I then rarely update to the next release. I still have some etch servers. They’re all due for a migration to VMs running wheezy, though.

I haven’t upgraded, mainly because of the PHP4 to PHP5 transition and not having time to fix existing code. But now the hardware is failing and I don’t have a choice. :(

Michael

I think that when you start a new project that needs a server, you end up using testing because it contains the most recent software and libraries, and you need them. Once the project is finished, you no longer need to upgrade your requirements, so stable is fine.

I think very few projects initially target the software available in stable. Most target what is in unstable (more or less what you can find in testing).

It looks to me like the number of stable Squeeze installations is increasing, at about the same rate that Lenny and Etch installations are decreasing. That suggests people are slowly rolling previous releases forward to the current release.

What surprises me is the nice smooth curve of decreasing use after a release. The top is always up and down but the bottom is always smooth. Interesting.

We start switching servers to testing towards the tail end of the release freeze when we need newer software, and leave those servers on stable after the release. I suspect lots of people do the same. (We also update all servers on oldstable to stable starting as soon as stable is finalized, whether they need it or not.)

Agreed with Russ – if you start a new project now, you may start your server out on Wheezy, as it will probably not see many more disruptive changes, and it will save you a dist-upgrade in the near future.

I’m not sure what you mean by this: “upgraded directly to testing, and switched back to tracking stable after the release”. As we all use codenames in our sources.list, when you install wheezy and wheezy gets released, there’s no “switching back to tracking stable after the release”, but rather “continuing to use wheezy”.

I report popcon stats on my workstations, but almost never on my servers. My understanding was that the goal of popcon is to better understand user preferences. A server that is base + postfix + one of [TeX, Samba, etc.] + organization-local/specific packages really isn’t very interesting from a reporting perspective. A workstation where I have tried to find an MP3 player or games that I like (and usually removed the ones I don’t) has more reporting value.

Have I misunderstood the intent of popcon, or are you trying to use the data in a way it wasn’t quite intended for?

Warren: people running Debian on servers are Debian users too, popcon isn’t specific to desktop/workstation users. I would say you seem to have misunderstood the intent.

on which box did you run it?

on popov (popcon itself) I get “Can’t locate JSON.pm”, and I do not think it is worth shuffling 2GB of popcon results across the net

maybe it is worth adding that data.json excerpt to git; it shouldn’t be large

it would be nice to get a plot

– scaled to 100% so we really look at ratios

– add “end of support” for each release (well, lenny)… I do not see the expected inflection point when support stopped (lenny in Feb 2012), which suggests that people keep running older releases despite the lack of security support (worrisome)

it is a very nice idea, and we should make sure there is a new version of popcon for each release! ;-) Thanks

@Yaroslav: data.json pushed to git

(Yes, I did copy those 2 GB of data to be able to generate the results)

FWIW, here is the plot scaled to 100% for every date point:

http://www.onerussian.com/tmp/releases.png

To scale, I just did

```r
rel[, 2:7] <- 100 * rel[, 2:7] / rowSums(rel[, 2:7])
```

right after loading `rel`. Not sure if that is R-kosher, but it seems to work ;)

Thanks again, Lucas, for sharing.
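The row-wise scaling above can be checked on a small toy table. The column names and counts below are made up and are not real popcon data. The original one-liner indexes columns `2:7`, presumably because its data has six release columns. This sketch uses `2:ncol(rel)` instead and also shows base R's `prop.table(..., margin = 1)` as an equivalent alternative.

```r
# Hypothetical toy data: one row per date, a date column followed by
# submission counts per release (made-up numbers, not real popcon data).
rel <- data.frame(
  date    = as.Date(c("2011-01-01", "2011-06-01")),
  etch    = c(10, 5),
  lenny   = c(60, 15),
  squeeze = c(0, 40),
  testing = c(30, 20)
)

counts <- 2:ncol(rel)  # every column except the date

# Scale each row so that the release shares add up to 100%.
# Dividing a data frame by a vector of row totals divides row i by total i.
scaled <- rel
scaled[, counts] <- 100 * rel[, counts] / rowSums(rel[, counts])

# Equivalent, using base R's prop.table() row-wise (margin = 1).
alt <- 100 * prop.table(as.matrix(rel[, counts]), margin = 1)

print(scaled)
```

Both forms give the same shares. The `prop.table()` variant just states the "divide by the row total" intent more explicitly.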